The Most Accurate LLM for AI Agents.

TheAgenticAI combines a mixture of open-source models with online reinforcement learning to deliver exceptional accuracy for agentic AI workflows.

Multi-Step Reasoning, Long-Context, and Function-Calling accuracy

20% - 25% more accurate than Claude3.5-Sonnet or GPT4

Outperforms Claude 3.5 Sonnet/Opus and GPT-4 on complex agentic reasoning tasks that combine multi-step workflows with function-calling, code generation and structured outputs.

No API changes required.

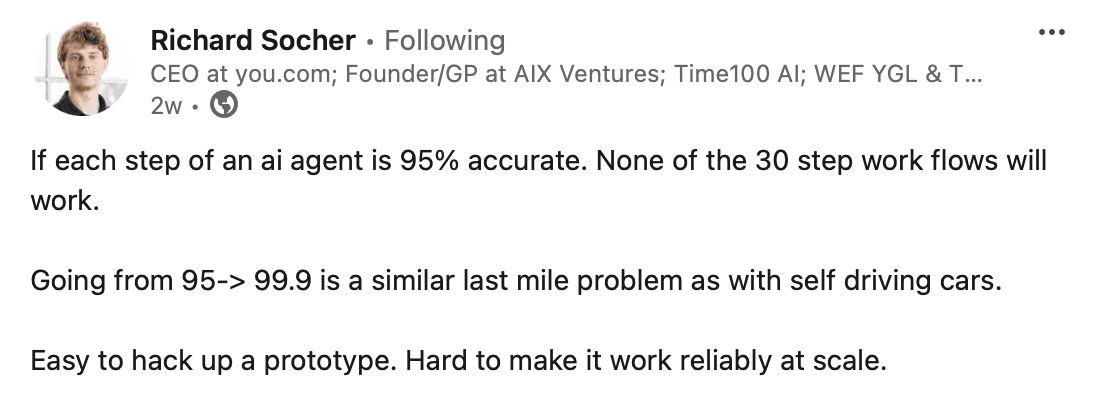

What Problem are We Solving?

Current SOTA LLMs are only good for simple AI agents involving single-step operations. They fall short on accuracy for moderate to complex reasoning tasks that require multi-step operations.

For single-step operations, models like GPT4 or Claude3.5-Sonnet can reach more than 90% accuracy, but that quickly falls to a mere 57-66% on multi-step reasoning tasks, rendering them highly unreliable.

Let’s be honest, accuracy in that range is not something your users would want in production.

TheAgenticAI Approach

TheAgenticAI's MoA approach, combined with online reinforcement learning, routinely achieves accuracy 20% - 25% higher than GPT4 or Claude3.5 on multi-step reasoning tasks, and the best part is that it involves no modification to your existing pipelines.

Available via OpenAI SDK compatible API
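In practice, "OpenAI SDK compatible" usually means an existing client only needs a different base URL, API key and model name. Here is a minimal sketch under that assumption, using the official OpenAI Python SDK (v1+). The endpoint URL and model id below are placeholders, not values published on this page, so substitute the ones from your account.

```python
# Hypothetical values: this page does not state the endpoint URL or model id.
THEAGENTIC_BASE_URL = "https://api.example.invalid/v1"
THEAGENTIC_MODEL = "theagentic-ensemble"


def client_settings(api_key: str, base_url: str = THEAGENTIC_BASE_URL) -> dict:
    """Return the only client arguments that differ from a stock OpenAI setup."""
    return {"api_key": api_key, "base_url": base_url}


def ask(prompt: str, api_key: str) -> str:
    """Send one chat request through the OpenAI SDK, pointed at another endpoint."""
    # Imported lazily so this module still loads where the SDK is not installed.
    from openai import OpenAI

    client = OpenAI(**client_settings(api_key))
    response = client.chat.completions.create(
        model=THEAGENTIC_MODEL,
        messages=[{"role": "user", "content": prompt}],
    )
    return response.choices[0].message.content
```

The rest of a pipeline (message format, response parsing) would stay as it is today, which is the point of a compatible API.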

We Have Numbers Backing Our Theory

We’ve put our claims to the test by evaluating GPT-4, Claude 3.5 Sonnet, and TheAgenticAI Ensemble on T-Eval, a comprehensive benchmark designed to assess the reasoning and tool-calling capabilities of LLMs across complex areas such as structuring, planning, and multi-step reasoning.

Source: T-Eval Test Benchmark

Benchmark Results: Reason-Retrieve-Understand Task

Thought (Score for Chain of Thought)

Measures: The quality of reasoning in function-calling tasks.

Interpretation: A higher score indicates more coherent and logical decision-making.

Name (Score for Function Name)

Measures: Accuracy in identifying and selecting the correct function.

Interpretation: A higher score signifies greater precision in choosing the right function.

Arguments (Score for Function Arguments)

Measures: Accuracy in passing correct arguments to the selected functions.

Interpretation: A higher score reflects more accurate and appropriate input handling.
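To make the Name and Arguments scores concrete, here is a simplified sketch of how such scores could be computed with exact matching. It illustrates the idea only and is not T-Eval's actual scoring code. The `ToolCall` type and `score_calls` function are hypothetical names introduced for this example.

```python
from dataclasses import dataclass, field


@dataclass
class ToolCall:
    """One function call: the function name plus its keyword arguments."""
    name: str
    arguments: dict = field(default_factory=dict)


def score_calls(predicted, expected):
    """Return (name_score, args_score) for paired predicted/expected calls.

    name_score: share of calls where the predicted function name is correct.
    args_score: among name-correct calls, share whose arguments match exactly.
    """
    if len(predicted) != len(expected) or not expected:
        raise ValueError("need two equal-length, non-empty lists of calls")
    pairs = list(zip(predicted, expected))
    name_matched = [(p, e) for p, e in pairs if p.name == e.name]
    name_score = len(name_matched) / len(pairs)
    if not name_matched:
        return name_score, 0.0
    args_hits = sum(1 for p, e in name_matched if p.arguments == e.arguments)
    return name_score, args_hits / len(name_matched)
```

Note that the Arguments score here is conditional on the function name being right, which matches how the results on this page are reported.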

Results Breakdown:

GPT-4

Out of 100 input sequences, GPT-4 correctly reasoned the chain of thought in about 57 cases.

For tool-calling, it identified the correct function 73% of the time (73 out of 100 cases). It provided the correct arguments for only about 64% of the correctly identified functions (46 out of 73 sequences).

In total, its tool-calling was successful for about 46 sequences out of 100.

TheAgenticAI Ensemble

The model demonstrated superior performance, correctly reasoning for 77 out of 100 cases.

It could identify the correct function to call 92% of the time (92 out of 100 cases), and provided the correct arguments for 87% of these 92 cases.

As a result, it made accurate function calls in about 80 out of 100 instances.

Its reasoning and tool-calling abilities significantly outperformed the current state-of-the-art (SOTA) models.
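The end-to-end figures above follow from multiplying the two tool-calling scores: a call only succeeds when the function is right and its arguments are right. Here is a short sketch of that arithmetic using the percentages reported on this page. The function name `end_to_end_rate` is illustrative.

```python
def end_to_end_rate(name_accuracy: float, args_accuracy_given_name: float) -> float:
    """Share of calls with both the correct function and the correct arguments."""
    return name_accuracy * args_accuracy_given_name


# Percentages as reported in the results breakdown.
gpt4 = end_to_end_rate(0.73, 0.64)
ensemble = end_to_end_rate(0.92, 0.87)

print(f"GPT-4 end-to-end tool-calling: {gpt4:.1%}")
print(f"TheAgenticAI Ensemble end-to-end tool-calling: {ensemble:.1%}")
print(f"Difference: {(ensemble - gpt4) * 100:.1f} percentage points")
```

The products come out at roughly 47% and 80%, in line with the "about 46" and "about 80" out of 100 quoted above; the small gap for GPT-4 comes from rounding in the reported percentages.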

Conclusion

Even without domain-specific fine-tuning, TheAgenticAI already surpasses leading models like GPT-4 and Claude 3.5 Sonnet. With additional fine-tuning, it promises to achieve even greater accuracy, making it the ideal choice for sophisticated AI agents.

Attains greater accuracy with domain-specific fine-tuning.

Where Does TheAgenticAI’s Approach Shine?

Single-step Reasoning | Complex Output

Single-step Reasoning | Simple Output

Multi-step Reasoning | Simple Output

Multi-step Reasoning | Complex Output